spaCy for Entity Extraction

The first version of spaCy was released in 2015, and it quickly became a standard framework for enterprise-grade entity extraction (also known as named entity recognition, or NER).

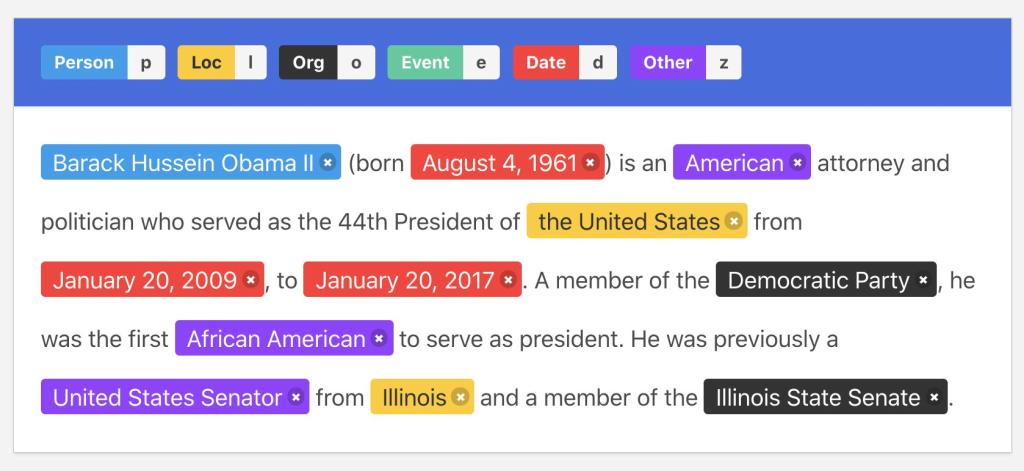

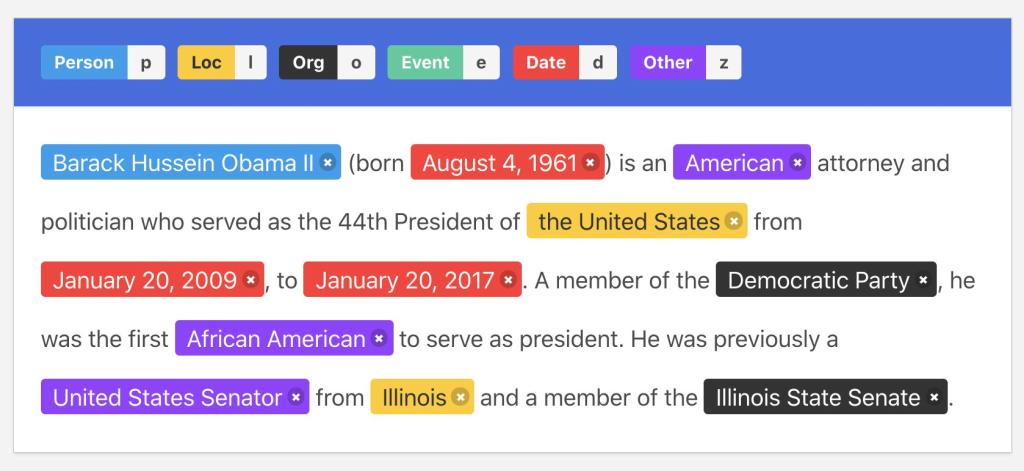

If you have a piece of unstructured text (coming from the web, for example) and you want to extract structured data from it, such as dates, names, or places, spaCy is a very good solution.

spaCy is interesting because pre-trained models are available for around 20 languages, so you do not necessarily have to train your own model for entity extraction. And if you do want to train your own model, you can start from a pre-trained one instead of starting from scratch, which can save you a lot of time.

spaCy is considered a "production-grade" framework because it is very fast and reliable, and it comes with comprehensive documentation.

However, if the default entities supported by spaCy's pre-trained models are not enough, you will need to work on "data annotation" (also known as "data labelling") in order to train your own model. This process is extremely time-consuming, and many enterprise entity extraction projects fail because of it.

Let's say that you want to extract job titles from a piece of text (from a resume, for example, or from a company web page). As spaCy's pre-trained models do not support such an entity by default, you will need to teach spaCy how to recognize job titles. To do so, you will need to create a training dataset that contains several thousand job title extraction examples (maybe even many more!). You may use paid annotation software like Prodigy (made by the spaCy team), but it still involves a lot of human work. It is actually quite common to see companies hire a bunch of contractors for several months to carry out a data annotation project. Such a job is so repetitive and boring that the resulting datasets often contain a lot of mistakes...
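To make "training dataset" more concrete, here is a minimal sketch of what a single annotated job title example can look like: the raw text plus a list of `(start_char, end_char, label)` character offsets. This `{"entities": [...]}` shape is the dictionary format that spaCy v3's `Example.from_dict` accepts for entity annotations. The helper names (`annotate_job_titles`, `find_annotation_errors`) and the `JOB_TITLE` label are hypothetical. The naive dictionary lookup used here to pre-fill spans is an assumption for illustration only, not a substitute for human annotation, and the checker only catches a few mechanical mistakes (bad offsets, stray whitespace, overlapping spans).

```python
import re
from typing import Dict, List, Tuple

# One training example: (text, {"entities": [(start_char, end_char, label), ...]})
Annotation = Tuple[str, Dict[str, List[Tuple[int, int, str]]]]


def annotate_job_titles(text: str, job_titles: List[str],
                        label: str = "JOB_TITLE") -> Annotation:
    """Hypothetical helper: mark whole-word, case-insensitive matches of known titles.

    Longer titles are tried first so that "Senior Data Scientist" is kept
    instead of the shorter "Data Scientist" it contains.
    """
    spans: List[Tuple[int, int, str]] = []
    taken = [False] * len(text)  # characters already covered by an accepted span
    for title in sorted(job_titles, key=len, reverse=True):
        pattern = re.compile(r"\b" + re.escape(title) + r"\b", re.IGNORECASE)
        for match in pattern.finditer(text):
            start, end = match.span()
            if not any(taken[start:end]):  # skip matches inside a longer title
                spans.append((start, end, label))
                for i in range(start, end):
                    taken[i] = True
    spans.sort()
    return text, {"entities": spans}


def find_annotation_errors(example: Annotation) -> List[str]:
    """Hypothetical checker for a few mechanical annotation mistakes."""
    text, annotations = example
    errors: List[str] = []
    previous_end = -1
    for start, end, label in sorted(annotations["entities"]):
        if not (0 <= start < end <= len(text)):
            errors.append(f"invalid offsets ({start}, {end}) for {label}")
            continue
        span = text[start:end]
        if span != span.strip():
            errors.append(f"span {span!r} has leading or trailing whitespace")
        if start < previous_end:
            errors.append(f"span {span!r} overlaps a previous entity")
        previous_end = max(previous_end, end)
    return errors


if __name__ == "__main__":
    text = "Jane is a Senior Data Scientist; Bob is a data scientist."
    example = annotate_job_titles(text, ["Data Scientist", "Senior Data Scientist"])
    print(example)
    print(find_annotation_errors(example))
```

Spans are stored as character offsets rather than token indices because that is how the dictionary format above expresses entities. Real annotation tools add much more (review workflows, inter-annotator agreement), which is exactly the human effort the paragraph above describes.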

Data Annotation Example

Let's see which alternative solutions you could try in 2023!