John Doe has been working for the Microsoft company in Seattle since 1999.

What are noun chunks / noun phrases?

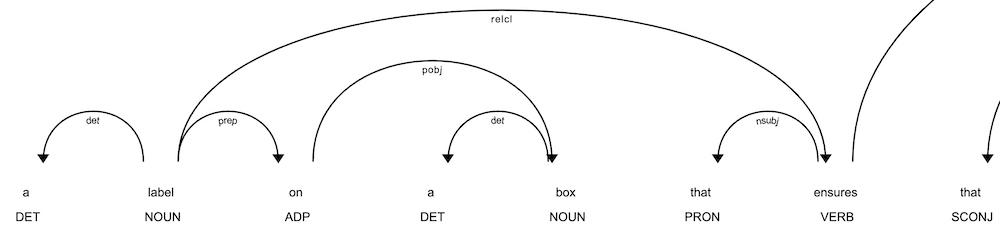

Noun chunks is a core feature of Natural Language Processing. They are known as "noun phrases" in linguistics. Basicall they are nouns and all the words that depend on these nouns.

For example, let's say you have the following sentence:

Here are the noun chunks from this sentence:

- "John Doe" (Root text: "Doe" / Root dependency: "nsubj" / Root head text: "working")

- "the Microsoft company" (Root text: "company" / Root dependency: "pobj" / Root head text: "for")

- "Seattle" (Root text: "Seattle" / Root dependency: "pobj" / Root head text: "in")
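
To make the idea concrete, here is a minimal sketch of how noun chunks could be derived from a dependency parse. The parse below is annotated by hand for illustration (it is an assumption, not the output of a real parser), and the `Token` class, `ROOT_DEPS` set, and `noun_chunks` function are hypothetical names. In practice an NLP library does this work for you; spaCy, for instance, exposes noun chunks as `doc.noun_chunks`.

```python
from dataclasses import dataclass


@dataclass
class Token:
    text: str
    pos: str   # coarse part-of-speech tag
    dep: str   # dependency label linking the token to its head
    head: int  # index of the head token in the sentence


# Hand-annotated parse of the example sentence (assumed, not parser output).
SENTENCE = [
    Token("John", "PROPN", "compound", 1),
    Token("Doe", "PROPN", "nsubj", 4),
    Token("has", "AUX", "aux", 4),
    Token("been", "AUX", "aux", 4),
    Token("working", "VERB", "ROOT", 4),
    Token("for", "ADP", "prep", 4),
    Token("the", "DET", "det", 8),
    Token("Microsoft", "PROPN", "compound", 8),
    Token("company", "NOUN", "pobj", 5),
    Token("in", "ADP", "prep", 4),
    Token("Seattle", "PROPN", "pobj", 9),
    Token("since", "ADP", "prep", 4),
    Token("1999", "NUM", "pobj", 11),
    Token(".", "PUNCT", "punct", 4),
]

NOUN_TAGS = {"NOUN", "PROPN", "PRON"}
# Simplified set of labels a chunk root may carry (an assumption).
ROOT_DEPS = {"nsubj", "dobj", "pobj", "attr", "ROOT"}


def noun_chunks(tokens):
    """Return (chunk text, root text, root dependency, root head text) tuples."""
    chunks = []
    for i, tok in enumerate(tokens):
        if tok.pos not in NOUN_TAGS or tok.dep not in ROOT_DEPS:
            continue
        # Grow the chunk leftwards while the preceding token attaches
        # to something already inside the chunk.
        start = i
        while start > 0 and start <= tokens[start - 1].head <= i:
            start -= 1
        text = " ".join(t.text for t in tokens[start:i + 1])
        chunks.append((text, tok.text, tok.dep, tokens[tok.head].text))
    return chunks


for chunk in noun_chunks(SENTENCE):
    print(chunk)
```

With this hand-annotated parse, the sketch yields the same three chunks listed above. It only handles modifiers that sit to the left of the noun, which is enough for this sentence but far simpler than what a real parser-backed implementation covers.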