Article by Rafał Rybnik, Head of Software Development at Instytut Badań Pollster

Unless stated otherwise, all pictures in the article are by the author.

In today’s online advertising reality, effective marketing tactics rely on a variety of user tracking techniques, such as third-party cookies (and alternative storage mechanisms) and device fingerprinting. But in a world of data leaks, GDPR, CCPA and the growing body of data protection legislation inspired by them, this approach is becoming obsolete. Safari and Firefox have already built in solutions to reduce cross-site tracking, and Chrome is working on alternatives, so the end of third-party cookies is near. Apple’s Identifier for Advertisers (IDFA) will soon be accessible only to apps with explicit consent from the user. The disappearance of cross-domain tracking is pushing advertisers back to contextual advertising.

In this article, I show you how to implement contextual targeting based on the Text Classification API provided by NLP Cloud. The approach described here can easily be adapted to any advertising technology (ad servers, OpenRTB, etc.).

Because advertisers won’t be able to target individual users with third-party cookies, it is easy to predict that contextual advertising campaigns will rise again. This may be the only way to target user interests on a large enough scale. Contextual ads are based on the content the user is looking at right now, instead of their browser history or behavioral profile.

(picture from What Is Contextual Advertising?)

It is supposed to be more interesting for users, as they’ll see ads that match the topic of the pages they are visiting.

Most ad serving technologies and ad networks support passing keywords or tags in the ad serving code. Text is the core of the web and can be an extremely rich source of information. However, extracting context, tags and keywords from it, e.g. for advertising or recommendation purposes, can be hard and time-consuming. If you own even a medium-sized news site, it will be difficult to extract all the relevant topics beyond the few tags assigned by the editorial team.

Early attempts to automate this process resulted in some more or less hilarious screw-ups:

(picture from Bad Ad Placements Funny, If Not Yours)

Fortunately, advances in Natural Language Processing allow for much more accurate matches in less time. Text classification is the assignment of categories or labels that match the content of a text.

Let’s consider an example page with articles on a variety of topics:

Our goal is to have ad placements display banners thematically related to the article content.

Conditions that our solution must meet:

Note that advertising systems and web development are outside the scope of this article, but the general concepts remain the same regardless of the tools and technologies used.

My preferred solution in such cases is to move the text classification logic into a separate API. We have two options: build it ourselves or use a ready-made solution.

Preparing a simple text classification engine with Python and Natural Language Processing libraries is an afternoon’s work. The problems arise with accuracy and with serving increased traffic: we need to handle a growing user base and their clickstream.

If you are a website owner, you are unlikely to want to spend time tuning and evaluating machine learning models, so we will delegate as much as we can to an external solution. Note that we do not plan to send any user data here, only data belonging to the website. This makes using external contextual targeting tools much simpler from a user privacy perspective.

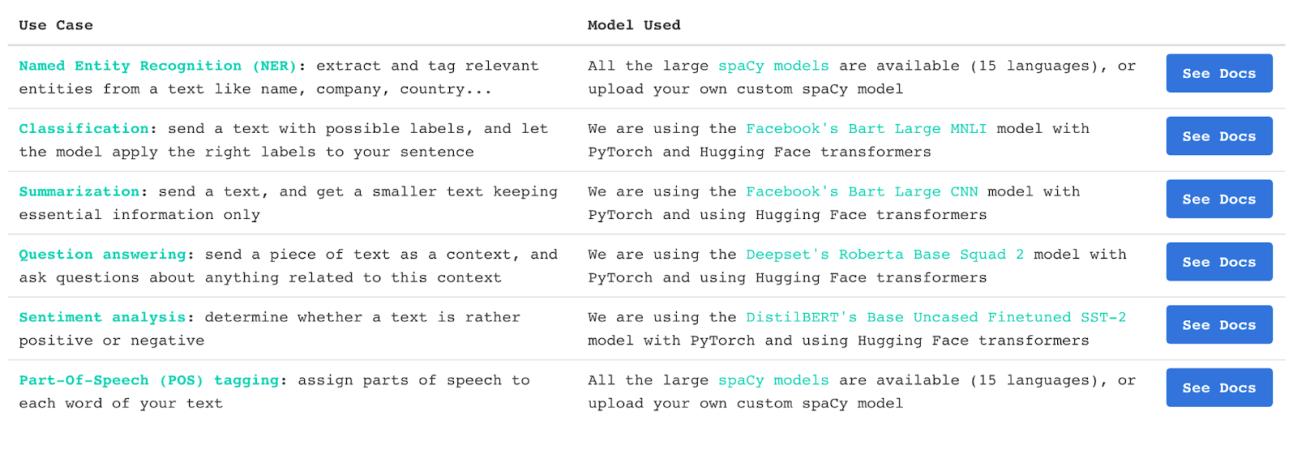

NLP Cloud is a provider of multiple APIs for text processing using machine learning models. One of these is the text classifier, which looks promising in terms of simple implementation (see docs).

With the NLP Cloud API, you can try out which algorithm might be useful for a particular business case.

As the backend of the website is Python-based (Flask), we start by writing a simple client for the Natural Language Processing API:

```python
import requests


class TextClassification:
    def __init__(self, key, base='https://api.nlpcloud.io/v1/bart-large-mnli'):
        self.base = base
        self.headers = {
            "accept": "application/json",
            "content-type": "application/json",
            "Authorization": f"Token {key}",
        }

    def get_keywords(self, text, labels):
        """Return {'labels': [...], 'scores': [...]} from the API, or {} on failure."""
        url = f"{self.base}/classification"
        payload = {
            "text": text,
            "labels": labels,
            "multi_class": True,
        }
        try:
            response = requests.post(url, json=payload, headers=self.headers, timeout=10)
            response.raise_for_status()
            data = response.json()
            return {"labels": data["labels"], "scores": data["scores"]}
        except (requests.RequestException, ValueError, KeyError):
            # Network error, non-2xx status, invalid JSON or unexpected response shape
            return {}


tc = TextClassification(key='APIKEY')

print(
    tc.get_keywords(
        "Football is a family of team sports that involve, to varying degrees, kicking a ball to score a goal. Unqualified, the word football normally means the form of football that is the most popular where the word is used. Sports commonly called football include association football (known as soccer in some countries); gridiron football (specifically American football or Canadian football); Australian rules football; rugby football (either rugby union or rugby league); and Gaelic football.[1][2] These various forms of football share to varying extent common origins and are known as football codes.",
        ["football", "sport", "cooking", "machine learning"],
    )
)
```

Results:

```python
{
    'labels': ['sport', 'football', 'machine learning', 'cooking'],
    'scores': [0.9651273488998413, 0.938549280166626, 0.013061746023595333, 0.0016104158712550998]
}
```

Pretty good. Each label is assigned its relevance to the topic with no effort.
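If a label-to-score mapping is more convenient (for example, to look up a single campaign keyword), the response can be converted in a couple of lines. A minimal sketch using the scores printed above; `to_score_map` is a hypothetical helper name, not part of the NLP Cloud API:

```python
def to_score_map(response):
    """Turn {'labels': [...], 'scores': [...]} into {label: score}, highest score first."""
    pairs = zip(response.get("labels", []), response.get("scores", []))
    return dict(sorted(pairs, key=lambda pair: pair[1], reverse=True))


response = {
    "labels": ["sport", "football", "machine learning", "cooking"],
    "scores": [0.9651273488998413, 0.938549280166626, 0.013061746023595333, 0.0016104158712550998],
}
scores = to_score_map(response)
print(scores["football"])
```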

The plan is for an ad serving system to select which banner to display, based on the scores of the individually assigned labels. Therefore, to avoid exposing the API key and to keep more control over the data, we will write a simple proxy:

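```python
from flask import Flask, jsonify, request

app = Flask(__name__)


@app.route('/get-labels', methods=['POST'])
def get_labels():
    # tc is the TextClassification client created above
    payload = request.get_json(silent=True) or {}
    try:
        result = tc.get_keywords(payload['text'], payload['labels'])
    except (KeyError, TypeError):
        # Missing or malformed "text"/"labels" in the request body
        result = {}
    return jsonify(result)
```

Let’s assume we have three ad campaigns to run: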

Insurance company (keyword: insurance)

Renewable energy company (keyword: renewables)

Hairdresser (keyword: good look)
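Because the proxy sits between the browser and NLP Cloud, it is also a natural place to control what gets forwarded. A minimal sketch, assuming the proxy should accept only the three campaign keywords above and truncate long articles; `validate_request` and the `MAX_TEXT_CHARS` limit are hypothetical, and the function would be called at the start of the `get_labels` view, before `tc.get_keywords`:

```python
ALLOWED_LABELS = {"insurance", "renewables", "good look"}  # the three campaign keywords
MAX_TEXT_CHARS = 10_000  # hypothetical cap on the article length sent to the API


def validate_request(payload):
    """Return (text, labels) to forward to the classifier, or None to reject the request."""
    if not isinstance(payload, dict):
        return None
    text = payload.get("text")
    labels = payload.get("labels")
    if not isinstance(text, str) or not text.strip():
        return None
    if not isinstance(labels, list):
        return None
    # Only forward labels that belong to an active campaign
    labels = [label for label in labels if isinstance(label, str) and label in ALLOWED_LABELS]
    if not labels:
        return None
    return text[:MAX_TEXT_CHARS], labels
```

Rejecting unknown labels keeps arbitrary clients from using your API key to classify text against labels you never planned to pay for.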

Let’s sketch a front-end mechanism that displays the appropriate creative.

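```javascript
// Maps each keyword to the HTML of its creative; `false` means self-promotion.
// Replace the placeholder strings with your own banner markup (e.g. <img> tags).
function displayAd(keyword, placement_id) {
  var conditions = {
    false: '<!-- self-promotion banner -->',
    "insurance": '<!-- insurance banner -->',
    "renewables": '<!-- renewables banner -->',
    "good look": '<!-- hairdresser banner -->'
  };
  var banner = document.querySelector(placement_id);
  // Fall back to self-promotion for unknown keywords
  banner.innerHTML = conditions[keyword] || conditions[false];
}
```

This is our adserver 🤪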

Now, using fetch, we retrieve labels for the text of an article, which we read using its selector:

```javascript
var text = document.querySelector("#article").textContent;
var labels = ["insurance", "renewables", "good look"];

var myHeaders = new Headers();
myHeaders.append("Content-Type", "application/json");

var requestOptions = {
  method: 'POST',
  headers: myHeaders,
  body: JSON.stringify({"text": text, "labels": labels}),
};

fetch("http://127.0.0.1:5000/get-labels", requestOptions)
  .then(response => response.json())
  .then(result => {
    // The proxy returns an empty object or array when classification fails
    if (!result || !Array.isArray(result.scores) || result.scores.length === 0) {
      console.log("self-promote");
      displayAd(false, "#banner");
      return;
    }
    var scores = result['scores'];
    var labels = result['labels'];
    if (Math.max(...scores) >= 0.8) {
      console.log("Ad success");
      // Index of the highest-scoring label
      var indexOfMaxScore = scores.reduce((iMax, x, i, arr) => x > arr[iMax] ? i : iMax, 0);
      displayAd(labels[indexOfMaxScore], "#banner");
    } else {
      displayAd(false, "#banner");
    }
  })
  .catch(error => console.log('error', error));
```

Note that we only display the client ad if the score is at least 0.8:

```javascript
Math.max(...scores) >= 0.8
```

Otherwise, we display self-promotion.

This threshold is of course arbitrary and can be tightened or loosened as needed.
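The same decision rule could also live on the server, for example if you would rather have the proxy return a single keyword instead of raw scores. A minimal Python sketch mirroring the JavaScript logic above (threshold 0.8, `False` meaning self-promotion); `choose_ad` is a hypothetical helper:

```python
SELF_PROMOTION = False  # same sentinel the front-end passes to displayAd
THRESHOLD = 0.8


def choose_ad(result, threshold=THRESHOLD):
    """Return the best-scoring label if it reaches the threshold, else SELF_PROMOTION."""
    if not isinstance(result, dict):
        return SELF_PROMOTION
    labels = result.get("labels") or []
    scores = result.get("scores") or []
    if not scores or len(labels) != len(scores):
        return SELF_PROMOTION
    # Index of the highest score; ties keep the first one, like the reduce() in JavaScript
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best] if scores[best] >= threshold else SELF_PROMOTION
```

Keeping the threshold as a parameter makes it easy to tune per placement or per campaign.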

News about renewable energy sources fits PV cell ads.

News about dangers in the home can increase the intention to buy insurance.

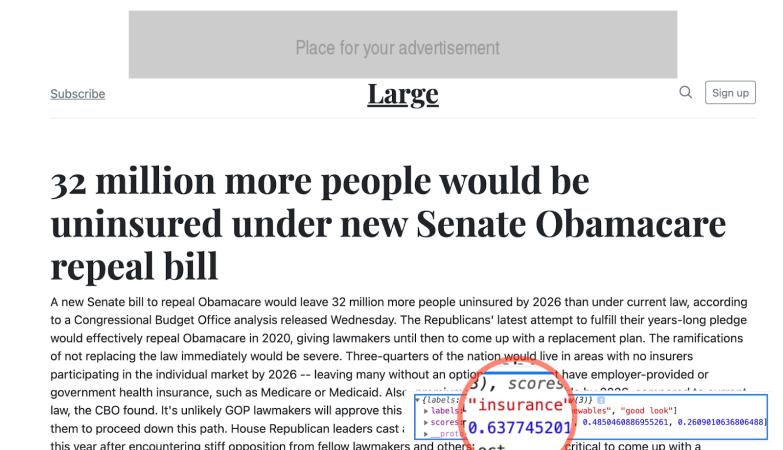

Although an ad about insurance would have been suitable for the article, it was not displayed because the required level of relevance was not reached.

The careful reader will notice that the hairdresser’s banner never appeared. This is because the articles focus on serious world news and do not address fashion topics. To run this campaign, you need to choose a different site or rethink your keyword strategy.
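One way to rethink the keyword strategy is to describe each campaign with several candidate labels and score the campaign by its best-matching label. A minimal sketch; the extra labels for each campaign and the helper names are hypothetical, and the label-to-score mapping would come from the classification API. Because the example scores earlier in this article do not sum to 1, labels appear to be scored independently (the request sets `multi_class` to `True`), so adding synonyms should not dilute the other labels’ scores.

```python
CAMPAIGNS = {
    "insurance": ["insurance", "home safety", "accident"],
    "renewables": ["renewables", "solar energy", "climate"],
    "hairdresser": ["good look", "hairstyle", "beauty"],
}


def campaign_labels(campaigns):
    """All distinct labels to send to the classifier in a single request."""
    return sorted({label for labels in campaigns.values() for label in labels})


def campaign_scores(label_scores, campaigns):
    """Score each campaign by its best-matching label; labels without a score count as 0."""
    return {
        name: max(label_scores.get(label, 0.0) for label in labels)
        for name, labels in campaigns.items()
    }
```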

Because fetch is asynchronous, requesting the labels does not block page loading.

However, the ad will only show after the labels have been downloaded. For this reason, and to reduce costs, it is best to implement some form of cache in a production environment.
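A minimal in-memory cache sketch for the proxy, assuming a single-process server (a multi-process deployment would more likely use a shared store such as Redis). `CachedClassifier` and the one-hour TTL are hypothetical; it wraps any object with a `get_keywords(text, labels)` method, such as the `TextClassification` client above:

```python
import hashlib
import json
import time


class CachedClassifier:
    """Wraps a classifier and caches its results per (text, labels) for `ttl` seconds."""

    def __init__(self, classifier, ttl=3600, clock=time.monotonic):
        self.classifier = classifier
        self.ttl = ttl
        self.clock = clock
        self._cache = {}  # key -> (expiry time, result)

    @staticmethod
    def _key(text, labels):
        # Hash the text and the sorted labels so equivalent requests share a key
        raw = json.dumps([text, sorted(labels)], ensure_ascii=False)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_keywords(self, text, labels):
        key = self._key(text, labels)
        now = self.clock()
        entry = self._cache.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        result = self.classifier.get_keywords(text, labels)
        if result:  # do not cache failures ({}), so they are retried next time
            self._cache[key] = (now + self.ttl, result)
        return result
```

Usage: `tc = CachedClassifier(TextClassification(key='APIKEY'))`; the proxy keeps calling `tc.get_keywords(...)` unchanged.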

Another modification is simply storing labels directly in the database. For infrequently updated articles, this certainly makes sense.
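For articles that rarely change, labels can be computed once (for example, when an article is published or edited) and stored next to the article. A minimal sketch using Python’s built-in `sqlite3`; the `article_labels` table and the helper functions are hypothetical, and a real site would use its existing database:

```python
import json
import sqlite3


def init_db(conn):
    conn.execute(
        """CREATE TABLE IF NOT EXISTS article_labels (
               article_id INTEGER PRIMARY KEY,
               result_json TEXT NOT NULL,
               updated_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
           )"""
    )


def save_labels(conn, article_id, result):
    """Store (or overwrite) the classifier result for an article."""
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT OR REPLACE INTO article_labels (article_id, result_json) VALUES (?, ?)",
            (article_id, json.dumps(result)),
        )


def load_labels(conn, article_id):
    """Return the stored result, or None if the article has not been classified yet."""
    row = conn.execute(
        "SELECT result_json FROM article_labels WHERE article_id = ?", (article_id,)
    ).fetchone()
    return json.loads(row[0]) if row else None
```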

However, a separate API that accepts any text and returns its labels lets us use the JS code on virtually any page, in near real time, even without access to the backend!

The biggest challenge for contextual targeting is news websites. The articles there cover many topics, including ones in line with the advertiser’s industry. At the same time, stories with sensational, often sad overtones are not a good place to advertise.

Fortunately, NLP Cloud’s text classification API does a pretty good job of tagging texts, so we can repeat the whole process, this time excluding texts on given topics from displaying banners (see the text classification API page).
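A minimal sketch of that exclusion step, assuming the article is classified against a set of sensitive topics in addition to the campaign keywords, and that the ad is suppressed if any of them scores too high. The topic list, the 0.5 blocking threshold and `is_brand_safe` are hypothetical choices that would need tuning on your own articles:

```python
SENSITIVE_TOPICS = ["disaster", "death", "war", "crime"]  # hypothetical blocklist
BLOCK_THRESHOLD = 0.5  # hypothetical; tune on your own content


def is_brand_safe(result, sensitive=SENSITIVE_TOPICS, threshold=BLOCK_THRESHOLD):
    """False if any sensitive topic in a {'labels', 'scores'} result reaches the threshold."""
    scores = dict(zip(result.get("labels", []), result.get("scores", [])))
    # Topics missing from the result count as 0
    return all(scores.get(topic, 0.0) < threshold for topic in sensitive)
```

In the proxy, you would send `campaign_keywords + SENSITIVE_TOPICS` as labels and fall back to self-promotion whenever `is_brand_safe(result)` is `False`.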

Thank you for reading. I hope you enjoyed reading as much as I enjoyed writing this for you.