NLP Cloud is an API for natural language processing.

What Are Embeddings?

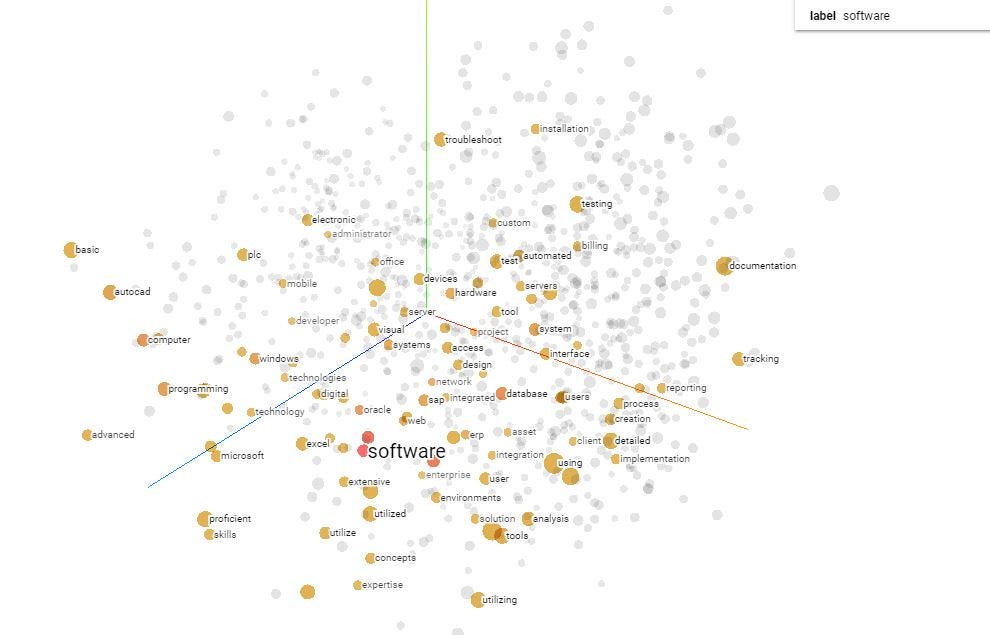

Embeddings are vector representations of pieces of texts. If 2 pieces of text have a similar vector representation, it most likely means that they have a similar meaning.

Imagine you have the 3 following sentences:

NLP Cloud proposes an API dedicated to NLP at scale.

I went to the cinema yesterday. It was great!

Here are the embeddings from the 3 above sentences (truncated for the sake of simplicity):

[[0.0927242711186409,-0.19866740703582764,-0.013638739474117756,-0.11876793205738068,0.011521861888468266,-0.03629707545042038, -0.030676838010549545,-0.03159608319401741,0.021390020847320557,0.03344911336898804,0.1698218137025833,-0.0009996045846492052, -0.07465217262506485,-0.21483412384986877,0.11283198744058609,0.03549865633249283,0.04985387250781059,-0.027558118104934692, 0.06297887861728668,0.09421529620885849,0.03700404614210129,0.06565431505441666,0.02284885197877884,0.06327767670154572, -0.09266531467437744,-0.014569456689059734,-0.06129194051027298,0.1818675994873047,0.09628438949584961,-0.09874546527862549, 0.030865425243973732, [...] ,-0.02097163535654545,0.021617714315652847,0.11045169830322266,0.01000999379903078,0.11451057344675064,0.18813028931617737, 0.007419265806674957,0.1630171686410904,0.21308083832263947,-0.03355317562818527,0.0778832957148552,0.2268853485584259,-0.13271427154541016, 0.005264544393867254,0.16081497073173523,0.09937280416488647,-0.12673905491828918,-0.12035898119211197,-0.06462062895298004, -0.0024213052820414305,0.08730605989694595,-0.04702030122280121,-0.03694896399974823,0.002265638206154108,-0.027780283242464066, -0.00017151003703474998,-0.20887477695941925,-0.2585527300834656,0.3124837279319763,0.05403835326433182,0.027094876393675804, -0.022925367578864098,0.038322173058986664]]

Embeddings are a core feature of Natural Language Processing because, once a machine is able to detect similarities between texts, it paves the ways for many interesting applications like semantic similarity, RAG (retrieval augmented generation) systems, semantic search, paraphrase detection, clustering, and more.