Create an Account

Signing up is quick: visit the registration page (register here) and enter your email address and a password.

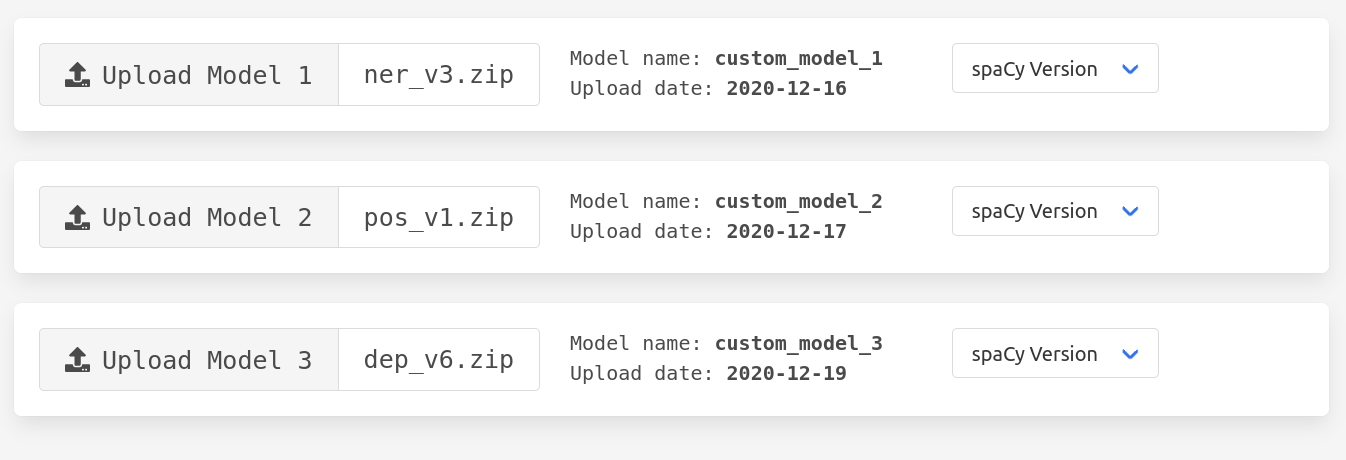

Once registered, you land on your dashboard, where your API token is displayed. Keep this token safe: you will need it for every API call you make.

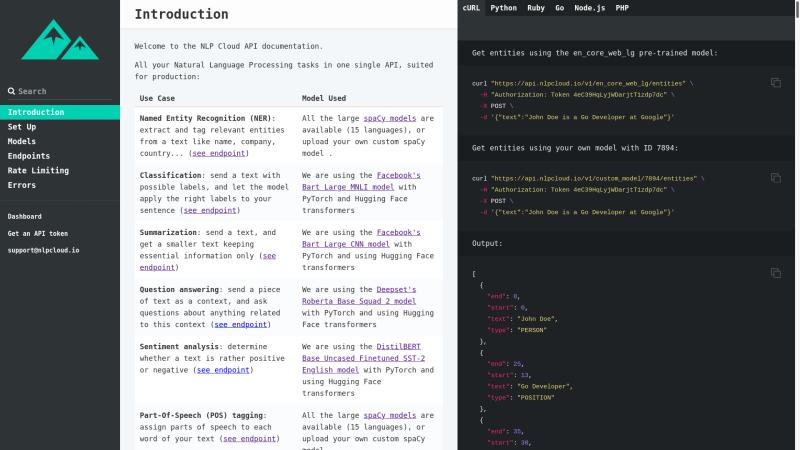
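One common way to keep the API token safe is to store it in an environment variable rather than hardcoding it in your scripts. The sketch below shows that approach using only the Python standard library. Everything service-specific in it is an assumption, not taken from this guide: the environment variable name `API_TOKEN`, the base URL `https://api.example.com`, the `/v1/status` path, and the `Bearer` scheme in the `Authorization` header are all hypothetical placeholders. Check the documentation or the snippets in your dashboard for the real endpoint and authentication scheme.

```python
import os
import urllib.request

# Hypothetical base URL: replace it with the endpoint given in the documentation.
API_BASE_URL = "https://api.example.com"


def load_token(env_var: str = "API_TOKEN") -> str:
    """Read the API token from an environment variable instead of hardcoding it.

    The variable name API_TOKEN is an assumption; use any name you like.
    """
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your API token")
    return token


def build_request(path: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries the token.

    Assumes a Bearer scheme in the Authorization header; the service may
    expect a different header or scheme, so check the documentation.
    """
    return urllib.request.Request(
        url=f"{API_BASE_URL}{path}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )
```

To send a request built this way, pass it to `urllib.request.urlopen`, for example `urllib.request.urlopen(build_request("/v1/status", load_token()))`. Keeping the token out of source code means it is not accidentally committed to version control.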

Your dashboard provides several code snippets to help you get up to speed quickly. For more details, read the documentation (see the documentation here).