Usage Guidelines And Application Review

Before GPT-3, OpenAI used to release its AI models as open source. GPT and GPT-2 were both open-source models that anyone could deploy and use as they pleased, hence the word "Open" in "OpenAI". But with GPT-3, OpenAI decided to keep the model as a black box, available only through its paid API, officially for ethical reasons.

Since then, open-source equivalents such as GPT-J and GPT-NeoX have been released, and you can install them yourself and use them however you like.

OpenAI is extremely restrictive about the kinds of applications it allows. You can't put an application that uses its API into production without first submitting it for review, and OpenAI enforces very strict "usage guidelines". Here is an overview of the review process.

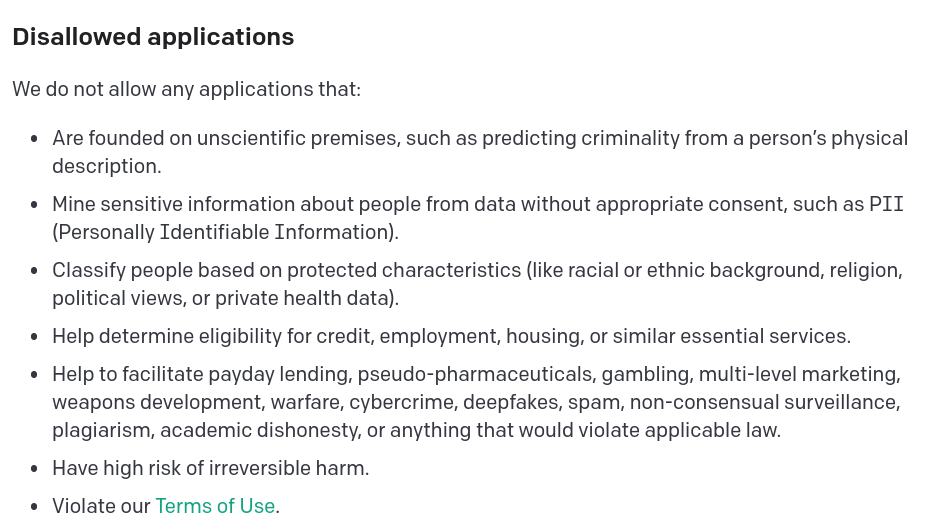

Some applications are simply not allowed by default, such as applications based on "unscientific" premises, paraphrasing and rewriting applications (considered "plagiarism"), multi-level marketing, and more. Here is a more detailed list from OpenAI's usage guidelines:

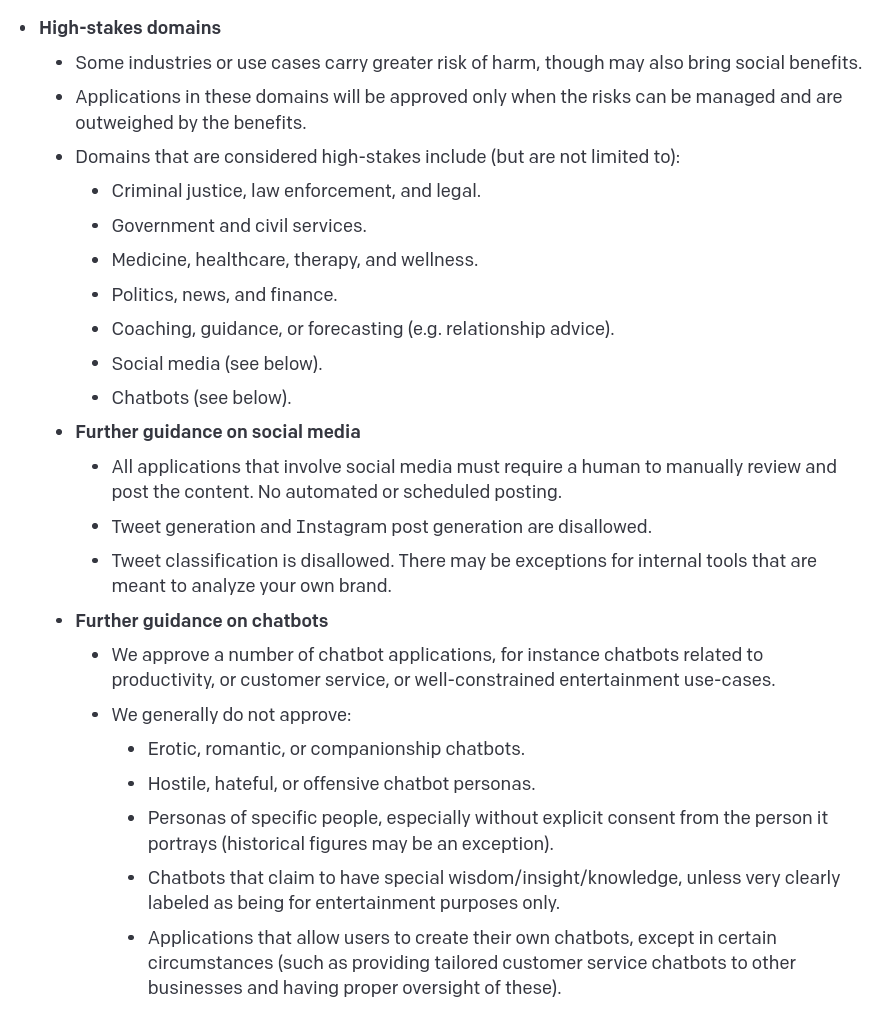

Additionally, many AI applications you might have in mind are very likely to be rejected by OpenAI. For example, you can't generate long-form content, which means you can't use GPT-3 to write an entire blog article for you. Many chatbot use cases are rejected too: you can't build a chatbot that acts as a companion, or one that uses insults or adult language. Your application is also very likely to be rejected if it is related to social media, healthcare, coaching, legal services, and much more. Here are some excerpts from OpenAI's guidelines about "high-stakes" domains (sensitive application areas where rejection is very likely) and about text length:

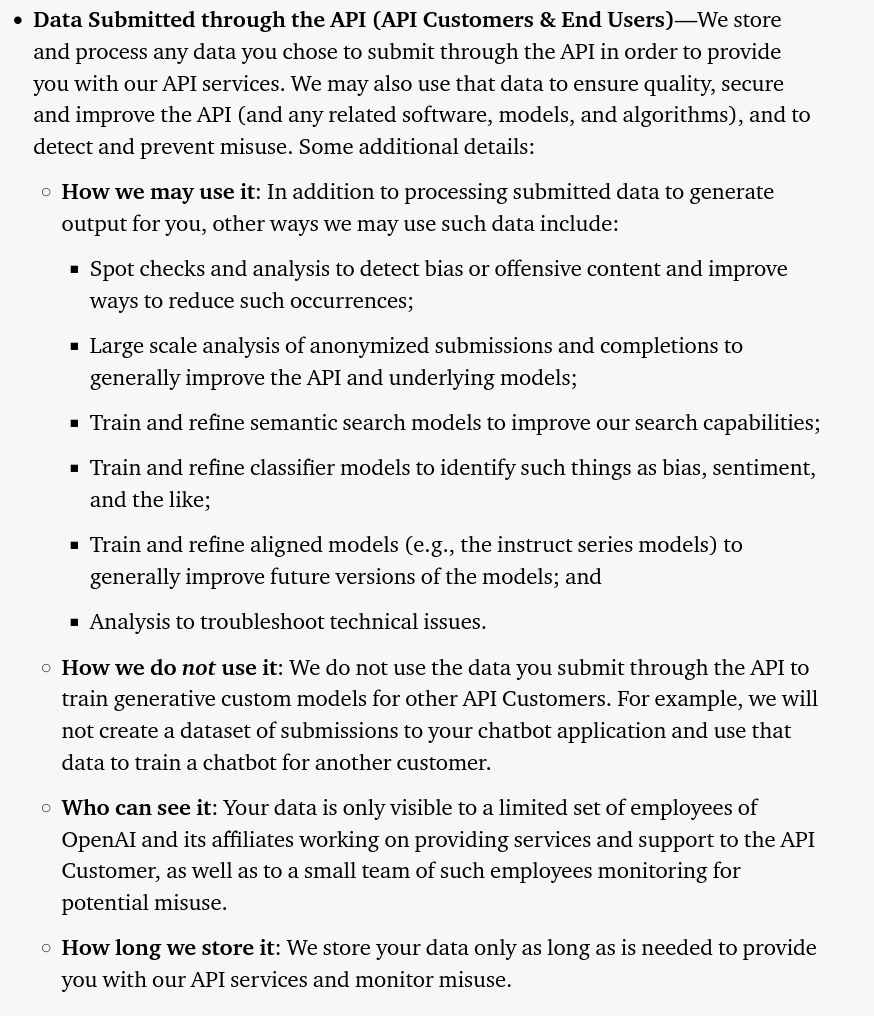

OpenAI asks you to implement a "user identifier" that uniquely identifies each end user of your application. Rate limiting is then applied per user: an end user can't make more than 60 requests per minute.
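The per-user cap is enforced on OpenAI's side, but an application often has to track it too, so that one heavy user does not trigger errors. The following is a minimal, hypothetical sketch of a sliding-window rate limiter keyed by user identifier, assuming a limit of 60 requests per 60 seconds. The class `PerUserRateLimiter` and its parameters are illustrative names only; they are not part of any OpenAI or NLP Cloud API, and this is not a description of how OpenAI implements its own limit.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional


class PerUserRateLimiter:
    """Hypothetical sliding-window limiter: at most `limit` requests
    per `window` seconds for each end-user identifier."""

    def __init__(self, limit: int = 60, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        # Timestamps of accepted requests, keyed by end-user identifier.
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, user_id: str, now: Optional[float] = None) -> bool:
        """Return True and record the request if `user_id` is under the limit."""
        t = self.clock() if now is None else now
        hits = self._hits[user_id]
        # Drop timestamps that have fallen out of the sliding window.
        while hits and t - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # This user has exhausted the quota for the current window.
        hits.append(t)
        return True
```

In use, you would call `limiter.allow(user_id)` before each upstream request and reject or queue the request when it returns `False`. Because each user has a separate deque of timestamps, one user hitting the cap does not affect any other user.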

Many projects are simply aborted because of these strict limitations.

NLP Cloud applies none of these restrictions. You can use NLP Cloud for any kind of application, and you can make as many requests as you want per end user without rate limiting (as long as you select the right plan, of course).