
The examples above are a good starting point, but you can often get better results by adding specific keywords. The Stability AI team recommends trying some of the following keywords in your instructions:

Highly detailed, surrealism, trending on ArtStation, triadic color scheme, smooth, sharp focus, matte, elegant, the most beautiful image ever seen, illustration, digital paint, dark, gloomy, octane render, 8k, 4k, washed colors, sharp, dramatic lighting, beautiful, post processing, picture of the day, ambient lighting, epic composition.
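In practice, using these keywords means appending comma-separated style terms to a description of your subject. As a minimal sketch, assuming you assemble prompts in Python before pasting them into whatever Stable Diffusion interface you use, the hypothetical `build_prompt` helper below does exactly that and drops keywords that appear more than once. It is not part of any Stability AI tool; the function name and the case-insensitive deduplication rule are illustrative choices.

```python
def build_prompt(subject: str, keywords: list[str]) -> str:
    """Hypothetical helper: return 'subject, kw1, kw2, ...'.

    Keywords are trimmed, empty ones are skipped, and duplicates are
    detected case-insensitively; the first spelling seen is kept.
    """
    seen: set[str] = set()
    parts = [subject.strip()]
    for kw in keywords:
        cleaned = kw.strip()
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            parts.append(cleaned)
    return ", ".join(parts)


if __name__ == "__main__":
    prompt = build_prompt(
        "Machine god rebuilding itself",
        ["highly detailed", "dramatic lighting", "octane render", "8k", "Dramatic lighting"],
    )
    # prints: Machine god rebuilding itself, highly detailed, dramatic lighting, octane render, 8k
    print(prompt)
```

If you deliberately repeat a term (some of the example prompts below do), remove the deduplication check so the repeats are kept.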

No doubt you will discover special instructions that nobody has tried before and that create amazing results!

Also, feel free to write longer instructions. You don't have to stick to a single sentence; you can use a whole paragraph instead, for example.

If you need ideas, here are some interesting examples:

highly detailed futuristic Apple iGlass computer glasses on face of human, cyberpunk, hand tracking, concept art, character art, studio lighting, bright colors, intricate, masterpiece, photorealistic, hyperrealistic, sharp focus, high contrast, Artstation HQ, DeviantArt trending, 8k UHD, Unreal Engine 5

A detailed manga illustration character full body portrait of a dark haired cyborg anime man who has a red mechanical eye, trending on artstation, digital art, 4 k resolution, detailed, high quality, sharp focus, hq artwork, insane detail, concept art, character concept, character illustration, full body illustration, cinematic, dramatic lighting

a cyberpunk zulu warrior sitting on a cliff watching a meteor fall to earth from a distance, by alena aenami and android jones and greg rutkowski, Trending on artstation, hyperrealism, elegant, stylized, highly detailed digital art, 8k resolution, hd, global illumination, ray tracing, radiant light, volumetric lighting, detailed and intricate cyberpunk ghetto environment, rendered in octane, oil on canvas, wide angle, dynamic portrait

Machine god rebuilding itself, fantasy, d & d, intricate, detailed, whimsical, detailed, trending on artstation, trending on artstation, smooth

Old wise Monk guiding a Lost Soul through Limbo, in the Style of Tomer Hanuka and Atey Ghailan, vibrant colors, trending on artstation

paul bettany as angel with wings is covered in vines and flowers and moss and standing in front of a beautiful cottage, a digital painting by thomas canty and thomas kinkade and ross tran, art nouveau, atmospheric lighting, trending on artstation

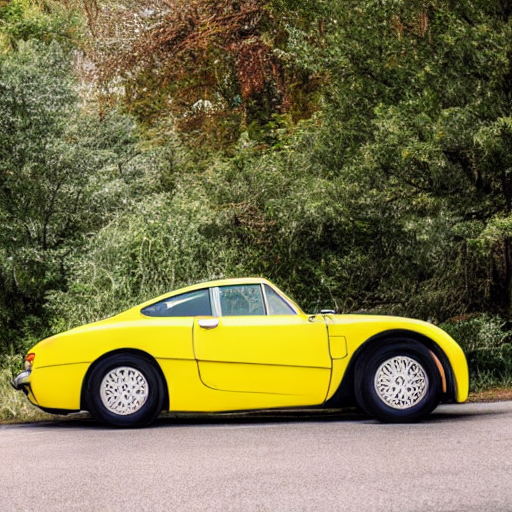

concept art for a car huge sharp spikes, painted by syd mead, high quality

Anxious good looking pale young Indian doctors wearing American clothes outside a hospital, portrait, elegant, intricate, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha

skull god, close - up portrait, powerful, intricate, elegant, volumetric lighting, scenery, digital painting, highly detailed, artstation, sharp focus, illustration, concept art, ruan jia, steve mccurry

ukrainian girl with blue and yellow clothes near big ruined plane, concept art, trending on artstation, highly detailed, intricate, sharp focus, digital art, 8 k

terrifying unholy crying ghost, very detailed face, detailed features, fantasy, circuitry, explosion, dramatic, intricate, elegant, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by Gustave Dore, octane render

Beautiful and playful lady liberty portrait, art nouveau, fantasy, holding a vase by Rene Lalique , elegant, highly detailed, sharp focus, art by Artgerm and Greg Rutkowski and WLOP

a portrait of a woman that is a representation of argentinian culture, buenos aires, fantasy, intricate, highly detailed, digital painting, artstation, concept art, smooth, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha

Painting by Greg Rutkowski, at night a big ceramic jug with gold ornaments flies high in the night dark blue sky above a small white house under a thatched roof, stars in the sky, rich picturesque colors

pizza party at a theme park, light dust, magnificent, close up, details, sharp focus, elegant, highly detailed, illustration, by Jordan Grimmer and greg rutkowski and PiNe(パイネ) and 薯子Imoko and 香川悠作 and wlop and maya takamura, intricate, beautiful, Trending artstation, pixiv, digital Art

Studio photograph of hyperrealistic accurate portrait sculpture of timothy dalton, beautiful symmetrical!! face accurate face detailed face realistic proportions, made of pink frosted glass on a pedestal by ron mueck and matthew barney and greg rutkowski, hyperrealism cinematic lighting shocking detail 8 k